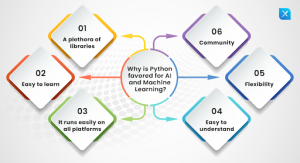

Python has become one of the most popular programming languages for machine learning. It offers a range of powerful tools and libraries that make it easier to develop complex and sophisticated models. In this blog post, we’ll share nine tips and tricks to help you master machine learning with Python, covering topics such as data pre-processing, feature selection, and model evaluation. With these tips and tricks, you’ll be able to use Python to create more accurate and efficient machine learning models. So let’s dive in!

1) Data Pre-Processing

Before diving into the world of machine learning, it’s essential to understand the importance of data pre-processing. This step prepares raw data by cleaning, transforming, and reorganizing it into a form that a machine learning algorithm can work with. Pre-processing matters because the accuracy of your model’s predictions depends heavily on the quality of its input data.

One crucial aspect of data pre-processing is handling missing data. Most real-world datasets contain missing values, and many machine learning algorithms cannot process incomplete data at all (many scikit-learn estimators, for example, raise an error when they encounter NaN values). Therefore, you should address missing values before training your model. Common approaches include dropping the rows or columns that contain missing values, or filling the gaps using imputation techniques such as replacing each missing value with the column’s mean or median.
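As a minimal sketch, assuming pandas and scikit-learn are installed, the two strategies above (dropping rows and mean imputation) might look like this. The small DataFrame and its column names are hypothetical, made up for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer

# Hypothetical toy dataset with missing values (NaN)
df = pd.DataFrame({
    "age": [25, np.nan, 47, 31],
    "income": [50_000, 62_000, np.nan, 58_000],
})

# Option 1: drop every row that contains at least one missing value
dropped = df.dropna()

# Option 2: replace each missing value with its column's mean (use strategy="median" for the median)
imputer = SimpleImputer(strategy="mean")
imputed = pd.DataFrame(imputer.fit_transform(df), columns=df.columns)

print(dropped)
print(imputed)
```

Dropping is simple but throws away information (here, half of the rows), which is why imputation is often preferred on small datasets.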

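Another vital pre-processing step is feature scaling, which brings all features into a similar range. The aim is to prevent features with large numeric values (income in dollars versus age in years, for example) from dominating the model’s predictions simply because of their size. Scaling is usually done through normalization (rescaling each feature to a fixed range such as [0, 1]) or standardization (rescaling each feature to zero mean and unit variance).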
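Here is a minimal sketch of both techniques with scikit-learn, using a hypothetical two-feature array. One detail worth showing: the scaler learns its statistics from the training data only and then reuses them on the test data, so no information from the test set leaks into training.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Hypothetical features on very different scales: age (years) and income (dollars)
X = np.array([[25, 50_000], [32, 64_000], [47, 120_000],
              [51, 98_000], [38, 72_000], [29, 55_000]], dtype=float)
X_train, X_test = train_test_split(X, test_size=2, random_state=0)

# Standardization: zero mean and unit variance per feature
standardizer = StandardScaler().fit(X_train)   # learn mean/std from training data only
X_train_std = standardizer.transform(X_train)
X_test_std = standardizer.transform(X_test)    # reuse the training statistics

# Normalization (min-max scaling): each feature rescaled to the [0, 1] range
normalizer = MinMaxScaler().fit(X_train)
X_train_norm = normalizer.transform(X_train)
X_test_norm = normalizer.transform(X_test)     # may fall slightly outside [0, 1]
```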

Lastly, data pre-processing involves dealing with categorical data: values that fall into discrete categories, such as colors or country names. Since most machine learning algorithms expect numerical input, categorical data needs to be converted into numbers. A common conversion is one-hot encoding, which creates one binary column per category and sets it to 1 when a row belongs to that category and 0 otherwise. One-hot encoding is effective because, unlike simply numbering the categories 0, 1, 2 and so on, it prevents the algorithm from assuming an order or numerical relationship between them.

2) Training and Test Sets

In order to create a successful machine learning model with Python, you need to properly divide your data into training and test sets. But what exactly does that mean and why is it so important?

When you first begin your machine learning project, you’ll likely have a large dataset with various variables that you believe are important for predicting your desired outcome. However, you can’t simply feed all of that data into your model and expect it to perform well. That’s where training and test sets come into play.

Your training set is the subset of your original dataset that you’ll use to train your machine learning model: the model learns from these examples how to make predictions based on the given variables. Your training set should be a random sample of your overall data and should contain enough observations for the model to learn general patterns rather than memorize noise.

Your test set is another subset of your original dataset, but this time it’s used only to evaluate the performance of your model. You should never use your test set for training – doing so will bias your results and make it impossible to know how well your model would perform on new data. The test set must not overlap with the training set, and it should contain enough observations to give a reliable estimate of performance.
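In scikit-learn, the usual way to make this split is `train_test_split`. The sketch below uses the built-in Iris dataset as a stand-in for your own data; the 80/20 ratio and the seed are arbitrary choices for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)

# Hold out 20% of the rows as a test set.
# stratify=y keeps the class proportions the same in both sets,
# and random_state makes the random shuffle reproducible.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)
print(X_train.shape, X_test.shape)  # (120, 4) (30, 4)
```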

The importance of splitting your data:

Splitting your data into training and test sets is crucial because it allows you to evaluate how well your model performs on data it hasn’t seen before. If you don’t have a separate test set, you won’t know how your model will perform on new, unseen data – which is the whole point of creating a machine learning model!

Additionally, splitting your data helps you detect overfitting. Overfitting occurs when your model fits the training data too closely, including its noise, and therefore doesn’t generalize well to new data. A model that scores much better on the training set than on the test set is a clear sign of overfitting, so a held-out test set lets you catch the problem before the model is used on real data.
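To make the train/test gap concrete, here is a small sketch (using scikit-learn’s built-in breast cancer dataset as an example) comparing an unconstrained decision tree, which can memorize its training data, with a depth-limited one. The exact scores depend on the data and split, so treat them as illustrative.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

# An unconstrained tree keeps splitting until it fits the training data (almost) perfectly
deep_tree = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)
# Limiting the depth is one simple way to reduce overfitting
shallow_tree = DecisionTreeClassifier(max_depth=3, random_state=0).fit(X_train, y_train)

for name, model in [("deep", deep_tree), ("shallow", shallow_tree)]:
    train_acc = model.score(X_train, y_train)
    test_acc = model.score(X_test, y_test)
    # A large gap between training and test accuracy is a warning sign of overfitting
    print(f"{name}: train={train_acc:.3f} test={test_acc:.3f}")
```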

Overall, splitting your data into training and test sets is an essential step in the machine learning process. It lets you train your model on one portion of the data and evaluate it honestly on another, so you can check whether it generalizes to new, unseen data. With proper training and evaluation, your machine learning model can become a powerful tool for predicting outcomes and solving complex problems in various fields.

3) Algorithms

In the world of machine learning, algorithms are the heart and soul of any project. They are essentially the mathematical models that make it possible for a computer to learn from data, identify patterns, and make predictions.

There are countless algorithms available for machine learning projects, each with its own strengths and weaknesses. Choosing the right algorithm for your project can be a challenge, but it is also an opportunity to explore different approaches and find the one that best suits your needs.

Some popular machine learning algorithms include linear regression, logistic regression, decision trees, random forests, support vector machines, and neural networks. Each has its own characteristics and is suited to particular types of data and problems.

Linear regression is a simple but powerful algorithm for predicting numerical values from a set of input variables. Logistic regression is a close relative of linear regression used for predicting binary outcomes (such as whether a customer will purchase a product or not): it passes a linear combination of the inputs through the logistic (sigmoid) function to produce a probability between 0 and 1.
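A minimal sketch contrasting the two with scikit-learn on synthetic data. The target `y = 3x + 2` and the threshold `x > 5` are hypothetical, chosen only so the results are easy to check.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, LogisticRegression

rng = np.random.default_rng(0)

# Regression: hypothetical numeric target y = 3x + 2 plus a little noise
X = rng.uniform(0, 10, size=(100, 1))
y = 3 * X[:, 0] + 2 + rng.normal(0, 0.5, size=100)
lin = LinearRegression().fit(X, y)
print(lin.coef_, lin.intercept_)  # close to [3.] and 2

# Classification: hypothetical binary label, 1 when x > 5
labels = (X[:, 0] > 5).astype(int)
clf = LogisticRegression().fit(X, labels)
print(clf.predict_proba([[2.0], [8.0]]))  # columns: P(class 0), P(class 1)
print(clf.predict([[2.0], [8.0]]))        # predicted classes
```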

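Decision trees are a popular algorithm for classification tasks, such as identifying whether a customer is likely to buy a product based on their demographic and behavioral data; they can also be used for regression. Random forests build on decision trees: they train many trees, each on a random sample of the data and a random subset of the features, and combine their predictions to get results that are usually more accurate and stable than those of a single tree.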

Support vector machines are another popular algorithm for classification tasks and, when combined with nonlinear kernels, are particularly effective for problems with complex decision boundaries. Finally, neural networks are a family of algorithms loosely inspired by the structure and function of the human brain. They are highly flexible and can be used for a wide range of tasks, from image recognition to natural language processing.
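One practical way to choose among these algorithms is to try several on the same data and compare cross-validated scores. The sketch below does this with scikit-learn’s built-in breast cancer dataset; the specific settings (200 trees, an RBF kernel, one hidden layer of 32 units) are illustrative assumptions, not recommendations. SVMs and neural networks are sensitive to feature scale, so they are wrapped in a pipeline with `StandardScaler`.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

candidates = {
    "decision tree": DecisionTreeClassifier(random_state=0),
    "random forest": RandomForestClassifier(n_estimators=200, random_state=0),
    # Scale features first for scale-sensitive models
    "SVM (RBF kernel)": make_pipeline(StandardScaler(), SVC(kernel="rbf")),
    "neural network": make_pipeline(
        StandardScaler(),
        MLPClassifier(hidden_layer_sizes=(32,), max_iter=1000, random_state=0),
    ),
}

results = {}
for name, model in candidates.items():
    scores = cross_val_score(model, X, y, cv=5)  # 5-fold cross-validated accuracy
    results[name] = scores.mean()
    print(f"{name}: {scores.mean():.3f} (+/- {scores.std():.3f})")
```

Which model wins depends on the dataset, which is exactly why a quick comparison like this is worth running before committing to one.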

Choosing the right algorithm for your project is just the first step – you also need to fine-tune the algorithm to achieve the best possible results. This involves adjusting hyperparameters (such as learning rate and regularization strength) to optimize performance on your specific dataset.

Ultimately, the key to success in machine learning is not just choosing the right algorithm, but also understanding the underlying principles and concepts that drive these algorithms. With the right knowledge and tools, you can use machine learning to solve complex problems and gain valuable insight from your data.

4) Model Evaluation

Once you have trained your machine learning model, you need to assess its performance before applying it to real-world data. Model evaluation is a fundamental step for making sure that your model is reliable. There are various strategies for evaluating a machine learning model, and this section covers some of the most common ones.

Cross-validation is one of the most widely used approaches to model evaluation. In k-fold cross-validation, you split your dataset into k subsets (folds) and train the model k times, each time holding out a different fold for testing and training on the remaining folds. Averaging the k scores gives a more reliable estimate of performance than a single split and helps reveal whether the model is overfitting the training data.
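A minimal k-fold sketch with scikit-learn, using the built-in Iris dataset and logistic regression as stand-ins. `shuffle=True` matters here because the Iris rows are sorted by class; without shuffling, some folds would contain classes the model never saw during training.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_score

X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000)

# 5-fold CV: each fold serves once as the validation set while the other 4 train the model
cv = KFold(n_splits=5, shuffle=True, random_state=0)
scores = cross_val_score(model, X, y, cv=cv)

print(scores)  # one accuracy score per fold
print(f"mean={scores.mean():.3f}, std={scores.std():.3f}")
```

The standard deviation across folds is as informative as the mean: a large spread suggests the model’s performance depends heavily on which data it sees.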

In addition, a confusion matrix is an important tool for assessing a classifier’s effectiveness. It breaks the model’s predictions down into true positives, true negatives, false positives, and false negatives. By analyzing these counts, you can see which kinds of mistakes your model makes and whether it needs modifications.
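Here is a small sketch with hypothetical labels and predictions, small enough to verify by hand:

```python
from sklearn.metrics import confusion_matrix

# Hypothetical true labels and model predictions for a binary problem
y_true = [0, 1, 1, 0, 1, 0]
y_pred = [0, 1, 0, 0, 1, 1]

cm = confusion_matrix(y_true, y_pred)
print(cm)
# [[2 1]
#  [1 2]]

# For binary labels [0, 1], scikit-learn lays the cells out as [[TN, FP], [FN, TP]]
tn, fp, fn, tp = cm.ravel()
print(tn, fp, fn, tp)  # 2 1 1 2
```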

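Moreover, ROC curves and AUC scores are useful for evaluating classifiers. A ROC curve plots the true positive rate against the false positive rate across a range of classification thresholds. The AUC (area under the curve) score, in turn, summarizes the ROC curve as a single number between 0 and 1, helping you understand the overall performance of your model: 1.0 means the model perfectly separates positives from negatives, while 0.5 is no better than random guessing.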
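A minimal sketch with hypothetical labels and predicted probabilities. Note that ROC analysis needs scores or probabilities (for example from `predict_proba`), not hard 0/1 predictions.

```python
import numpy as np
from sklearn.metrics import roc_auc_score, roc_curve

# Hypothetical true labels and predicted probabilities of the positive class
y_true = np.array([0, 0, 1, 1])
y_scores = np.array([0.1, 0.4, 0.35, 0.8])

# roc_curve sweeps the decision threshold and returns matching FPR/TPR pairs
fpr, tpr, thresholds = roc_curve(y_true, y_scores)

auc = roc_auc_score(y_true, y_scores)
print(auc)  # 0.75
```

An AUC of 0.75 here means that, for a randomly chosen positive and negative pair, the model scores the positive higher 75% of the time (3 of the 4 pairs).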

Finally, precision-recall curves are helpful for assessing the performance of your model, especially when the positive class is rare. They illustrate the trade-off between precision (the fraction of predicted positives that are actually positive) and recall (the fraction of actual positives that the model predicts as positive) across thresholds. By examining the precision-recall curve, you can judge how well your model separates the positive class from the rest and pick a threshold that suits your needs.
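The same hypothetical scores can be used to sketch a precision-recall analysis. Average precision is one common way to summarize the curve in a single number.

```python
import numpy as np
from sklearn.metrics import average_precision_score, precision_recall_curve

# Hypothetical true labels and predicted probabilities of the positive class
y_true = np.array([0, 0, 1, 1])
y_scores = np.array([0.1, 0.4, 0.35, 0.8])

# Precision and recall at each candidate threshold
precision, recall, thresholds = precision_recall_curve(y_true, y_scores)

# Average precision: precision at each threshold, weighted by the increase in recall
ap = average_precision_score(y_true, y_scores)
print(round(ap, 3))  # 0.833
```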

5) Hyperparameter Tuning

When it comes to machine learning, the selection of the right algorithm is just the tip of the iceberg. To make sure that the algorithm performs at its optimal level, one needs to tune the hyperparameters.

But what are hyperparameters? They are settings of an algorithm that are not learned from the data during training but are chosen before training begins. Think of them as knobs and levers that you can adjust to improve the performance of the model.
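In scikit-learn, this distinction shows up directly in the API: hyperparameters are passed to the constructor, while learned parameters appear as attributes ending in an underscore after `fit()`. A small sketch, using logistic regression’s regularization strength `C` as the example hyperparameter (the value 0.5 is arbitrary):

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)

# Hyperparameter: chosen by you before training (here, the regularization strength C)
model = LogisticRegression(C=0.5, max_iter=1000)
print(model.get_params()["C"])  # 0.5

# Parameters: learned from the data during fit()
model.fit(X, y)
print(model.coef_.shape)  # (3, 4): one weight per class and per feature
```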

Here are some tips for effective hyperparameter tuning in Python:

- Start with default settings: Most machine learning libraries come with default hyperparameters, which are usually a good starting point. You can train your model with these settings and then fine-tune the hyperparameters as needed.

- Understand the algorithm: Before you start tuning the hyperparameters, make sure that you understand how the algorithm works. This will give you a better idea of which hyperparameters to focus on.

- Use a grid search: A grid search is a popular method for hyperparameter tuning. It involves defining a grid of hyperparameter values and training the model for every combination of values. You can then select the combination that gives the best performance.

- Use a random search: While a grid search is a systematic approach to hyperparameter tuning, it can be very time-consuming when there are many hyperparameters, because the number of combinations grows multiplicatively. A random search is often more efficient: it evaluates a fixed number of combinations sampled at random from ranges or distributions you define.

- Use cross-validation: Cross-validation evaluates a model by splitting the data into multiple subsets and repeatedly training on some and testing on the others. Scoring each hyperparameter setting this way helps you avoid picking values that only happen to work well on one particular split, so the chosen hyperparameters are more likely to generalize to new data.
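The grid search, random search, and cross-validation tips combine naturally in scikit-learn’s `GridSearchCV` and `RandomizedSearchCV`. The sketch below tunes an SVM on the built-in breast cancer dataset; the parameter ranges are illustrative assumptions, not recommended values. Keep in mind that `best_score_` is measured on the data used for tuning, so a final check on a separate held-out test set is still advisable.

```python
from scipy.stats import loguniform
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import GridSearchCV, RandomizedSearchCV
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_breast_cancer(return_X_y=True)
pipe = make_pipeline(StandardScaler(), SVC())  # step names: "standardscaler", "svc"

# Grid search: every combination (3 x 2 = 6 candidates), each scored with 5-fold CV
param_grid = {"svc__C": [0.1, 1, 10], "svc__kernel": ["linear", "rbf"]}
grid = GridSearchCV(pipe, param_grid, cv=5)
grid.fit(X, y)
print("grid:", grid.best_params_, round(grid.best_score_, 3))

# Random search: 10 candidates sampled from log-uniform distributions, also with 5-fold CV
param_dist = {"svc__C": loguniform(1e-2, 1e2), "svc__gamma": loguniform(1e-4, 1e-1)}
rand = RandomizedSearchCV(pipe, param_dist, n_iter=10, cv=5, random_state=0)
rand.fit(X, y)
print("random:", rand.best_params_, round(rand.best_score_, 3))
```

Log-uniform distributions are a common choice for `C` and `gamma` because useful values span several orders of magnitude.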

Hyperparameter tuning is a crucial step in the machine learning process. By fine-tuning the hyperparameters, you can create models that are more accurate and reliable. With the tips and tricks outlined above, you can approach hyperparameter tuning in Python systematically and take your machine learning skills to the next level.

6) Feature Selection

One of the most important aspects of machine learning is choosing the right features to build your models. Features are the attributes or characteristics of your dataset that your model learns from. Choosing suitable features can improve your model’s accuracy and efficiency, while poor choices can hurt both.

Python libraries such as scikit-learn provide multiple feature selection approaches. These approaches are designed to identify the most important features in your dataset and discard irrelevant or redundant ones.

Below are some of the most commonly used feature selection methods in Python:

- Correlation-Based Feature Selection (CFS): This technique favors features that are strongly correlated with the target variable but weakly correlated with each other; a simpler variant just ranks features by their correlation with the target. The idea is to find the features most strongly associated with the target variable while avoiding redundant ones.

- Recursive Feature Elimination (RFE): RFE repeatedly trains your model, ranks the features by importance (for example, by the size of their coefficients), and removes the least important ones until only the desired number of features is left.

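- Principal Component Analysis (PCA): PCA is a dimensionality reduction technique that transforms your dataset into a new set of variables (principal components) that are uncorrelated with each other and ordered by how much of the data’s variance they capture. Strictly speaking, PCA creates new features rather than selecting existing ones, but keeping only the first few components is a common way to reduce the number of inputs.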

- Mutual Information (MI): MI measures how much information a feature provides about the target variable, that is, how much knowing the feature’s value reduces uncertainty about the target. Unlike correlation, it can also capture non-linear relationships, which helps you find the features most relevant to your problem.

- Lasso Regression: Lasso regression is a linear regression technique that penalizes the absolute size of the regression coefficients (an L1 penalty). This penalty shrinks the coefficients of less useful features to exactly zero, so the features that keep non-zero coefficients are the ones the model selects.
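The sketch below applies each of the five methods above to scikit-learn’s built-in diabetes regression dataset. The number of features to keep (`k = 4`) and the Lasso strength (`alpha=5.0`) are arbitrary choices for illustration, and the correlation filter is a simplified stand-in for full CFS (it ranks features individually and does not penalize correlation between features).

```python
from functools import partial

import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.decomposition import PCA
from sklearn.feature_selection import RFE, SelectFromModel, SelectKBest, mutual_info_regression
from sklearn.linear_model import Lasso, LinearRegression
from sklearn.preprocessing import StandardScaler

data = load_diabetes()
X = StandardScaler().fit_transform(data.data)  # put all features on a common scale
y = data.target
names = np.array(data.feature_names)
k = 4  # number of features to keep (arbitrary, for illustration)

# 1) Correlation filter: rank features by |Pearson r| with the target
corr = np.array([abs(np.corrcoef(X[:, j], y)[0, 1]) for j in range(X.shape[1])])
print("correlation:", names[np.argsort(corr)[::-1][:k]])

# 2) Recursive feature elimination, using linear-model coefficients as importances
rfe = RFE(LinearRegression(), n_features_to_select=k).fit(X, y)
print("RFE:", names[rfe.support_])

# 3) PCA: builds k new uncorrelated components (extraction, not selection)
pca = PCA(n_components=k).fit(X)
print("PCA explained variance ratio:", pca.explained_variance_ratio_.round(3))

# 4) Mutual information: also captures non-linear dependencies
mi = SelectKBest(partial(mutual_info_regression, random_state=0), k=k).fit(X, y)
print("mutual information:", names[mi.get_support()])

# 5) Lasso: the L1 penalty drives some coefficients to exactly zero
lasso = SelectFromModel(Lasso(alpha=5.0)).fit(X, y)
print("Lasso:", names[lasso.get_support()])
```

It is normal for the methods to disagree on the exact subset; comparing their picks is itself a useful way to see which features are consistently important.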

When it comes to feature selection, there’s no definitive solution. You need to pick the technique that is the most suitable for your specific problem and data set. You may need to experiment with different techniques and check how they perform before settling on one.

Feature selection is a key element of the machine learning process, and it can have a considerable effect on how well your models perform. By choosing the right features, you can improve the accuracy, speed, and efficiency of your models. With Python, you have access to a range of powerful tools and techniques that can help you master the art of feature selection.

7) Data Visualization

Data visualization is an essential aspect of machine learning. It helps you understand the data you’re working with, spot trends, and identify outliers. In this section, we’ll discuss how to create beautiful and effective data visualizations using Python.

Tip #1: Know your data

Before creating a data visualization, it’s essential to understand your data thoroughly. You need to know the data’s size, format, and what information it contains. By understanding the data, you can determine what types of visualizations will be most effective.
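With pandas, a few calls answer most of these questions. As a minimal sketch, assuming pandas is installed and using the built-in Iris dataset as a stand-in for your own data:

```python
from sklearn.datasets import load_iris

# Load a sample dataset as a pandas DataFrame (requires pandas)
df = load_iris(as_frame=True).frame

print(df.shape)         # size: (rows, columns), here (150, 5)
print(df.dtypes)        # format: the data type of each column
print(df.describe())    # summary statistics for the numeric columns
print(df.isna().sum())  # number of missing values per column
```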

Tip #2: Choose the right visualization

There are many types of data visualizations available, and each has its advantages and disadvantages. For instance, a scatter plot may be more effective for analyzing correlations, while a bar chart may be better for comparing categorical data. Choose a visualization that best suits your data and your goals.
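As an illustration with matplotlib, the sketch below puts the two chart types side by side on the Iris dataset: a scatter plot for the relationship between two numeric features, and a bar chart for comparing an average across categories. The `Agg` backend line is only there so the script runs without a display; the output file name is arbitrary.

```python
import matplotlib
matplotlib.use("Agg")  # render without a display; remove this line in a notebook
import matplotlib.pyplot as plt
from sklearn.datasets import load_iris

iris = load_iris(as_frame=True)
df = iris.frame

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(10, 4))

# Scatter plot: relationship (correlation) between two numeric features,
# colored by species with the colorblind-friendly "viridis" colormap
ax1.scatter(df["petal length (cm)"], df["petal width (cm)"], c=df["target"], cmap="viridis")
ax1.set_xlabel("petal length (cm)")
ax1.set_ylabel("petal width (cm)")
ax1.set_title("Correlation: scatter plot")

# Bar chart: comparing a summary value across categories
means = df.groupby("target")["sepal length (cm)"].mean()
ax2.bar(iris.target_names, means.values)
ax2.set_ylabel("mean sepal length (cm)")
ax2.set_title("Categories: bar chart")

fig.tight_layout()
fig.savefig("iris_plots.png")
```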

Tip #3: Simplify your visuals

Cluttered visualizations can be overwhelming and confusing. You need to keep your visualizations simple and clear, highlighting only the essential information. By simplifying your visuals, you can make your data more accessible to your audience.

Tip #4: Use colors effectively

Colors can help you highlight trends and patterns in your data, but you must use them effectively. Choose a color scheme that contrasts well, avoiding colors that are too similar or difficult to distinguish. Use colors consistently throughout your visualization to avoid confusion.

Tip #5: Tell a story with your data

Your visualization should tell a story, highlighting the key insights you’ve uncovered through your data analysis. It should have a clear and concise narrative that your audience can understand quickly. By telling a story, you can engage your audience and make your data more compelling.

Tip #6: Use interactive visualizations

Interactive visualizations allow your audience to explore your data on their own, making the experience more engaging. They can click on different parts of the visualization to reveal additional information, making the visualization more informative.

Tip #7: Test your visualizations

Before sharing your visualizations with your audience, test them with a small group of people to ensure that they are easy to understand and effective. Take note of their feedback, making adjustments as needed.

Tip #8: Share your visualizations

Finally, share your visualizations with your audience in a format that is easy to understand. You can use PDFs, images, or interactive web pages to share your data. The goal is to make your data accessible to your audience, helping them understand your insights.

8) Ensembling

Ensembling is a powerful technique used in machine learning to improve the accuracy and robustness of models. It involves combining the predictions of multiple models into a final prediction that is often more accurate and reliable than that of any individual model.

The idea behind ensembling is that while individual models may make mistakes, combining their predictions can lead to more accurate results. This is because different models may be better at capturing different aspects of the data or may be less sensitive to certain types of noise.

One common way to ensemble models is a technique called bagging (bootstrap aggregating). It trains multiple instances of the same model, each on a bootstrap sample (a random sample of the training data drawn with replacement), and then combines their predictions through averaging or voting.
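A minimal bagging sketch with scikit-learn, comparing a single decision tree with 50 bagged trees on the built-in breast cancer dataset. The number of trees is an arbitrary choice; exact scores will vary with the data.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import BaggingClassifier
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

single_tree = DecisionTreeClassifier(random_state=0)
# 50 trees, each trained on a bootstrap sample; because trees support predict_proba,
# BaggingClassifier combines them by averaging their predicted class probabilities
bagged_trees = BaggingClassifier(DecisionTreeClassifier(), n_estimators=50, random_state=0)

results = {}
for name, model in [("single tree", single_tree), ("bagged trees", bagged_trees)]:
    results[name] = cross_val_score(model, X, y, cv=5).mean()
    print(f"{name}: {results[name]:.3f}")
```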

Another popular ensemble technique is boosting. It trains a sequence of models in which each new model focuses on the errors made by the models before it, for example by giving more weight to misclassified examples (as in AdaBoost) or by fitting the remaining errors directly (as in gradient boosting). This way, the combined ensemble is better able to capture the underlying patterns in the data.
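A gradient boosting sketch with scikit-learn on the built-in breast cancer dataset; `n_estimators`, `learning_rate`, and `max_depth` are illustrative values. `staged_predict` makes the sequential nature of boosting visible by yielding the ensemble’s predictions after each added tree.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

# Each new shallow tree is fit to the errors (gradients of the loss) of the ensemble so far;
# learning_rate shrinks each tree's contribution
gb = GradientBoostingClassifier(n_estimators=200, learning_rate=0.1, max_depth=3, random_state=0)
gb.fit(X_train, y_train)
print("test accuracy:", round(gb.score(X_test, y_test), 3))

# Test accuracy of the ensemble after each added tree
staged = [(pred == y_test).mean() for pred in gb.staged_predict(X_test)]
print("after 1 tree:", round(staged[0], 3), "after 200 trees:", round(staged[-1], 3))
```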

Ensembling can be particularly useful when dealing with complex datasets or when using algorithms that are prone to overfitting. It can also be used to improve the performance of models that have similar levels of accuracy but make different types of errors.
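One way to combine different types of models that make different kinds of errors is a voting ensemble. The sketch below uses scikit-learn’s `VotingClassifier` with soft voting (averaging predicted probabilities) over three hypothetical choices of member model. Whether the ensemble beats its best member depends on the data, so compare the scores rather than assume it does.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier, VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_breast_cancer(return_X_y=True)

members = [
    ("logreg", make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))),
    ("forest", RandomForestClassifier(n_estimators=200, random_state=0)),
    # probability=True lets the SVM contribute class probabilities to soft voting
    ("svm", make_pipeline(StandardScaler(), SVC(probability=True, random_state=0))),
]
# Soft voting averages the members' predicted class probabilities
ensemble = VotingClassifier(estimators=members, voting="soft")

scores = {}
for name, model in members + [("soft-voting ensemble", ensemble)]:
    scores[name] = cross_val_score(model, X, y, cv=5).mean()
    print(f"{name}: {scores[name]:.3f}")
```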

In order to successfully ensemble models, it is important to carefully select the individual models that will be combined. It is also important to consider the potential trade-offs between accuracy, interpretability, and computational resources when choosing an ensemble method.

In addition to improving accuracy, ensembling can also provide valuable insights into the underlying patterns in the data. For example, inputs on which the individual models disagree often point to areas of the data that are particularly difficult to predict, and tree ensembles such as random forests report feature importances that highlight which features matter most.

Overall, ensembling is a powerful tool in the machine learning toolkit. By combining the predictions of multiple models, you can improve accuracy and robustness and gain valuable insights into the underlying patterns in the data. Whether you are dealing with complex datasets or simply looking to improve the accuracy of your models, ensembling is a technique that is well worth exploring.

9) Saving and Loading Models

Once you’ve trained a machine learning model, the next step is to save it so you can use it in the future. Saving models is crucial, especially if you’ve spent significant time and resources training them. Python provides simple ways to save and load machine learning models, making it easy to store them and access them at any time.

Saving a trained model lets you reuse it without having to train it again. A simple way to do this in Python is the built-in “pickle” module, which converts a model into a byte stream that can be written to disk.

Loading a saved model is just as easy as saving it: the “pickle” module also lets you read the saved model back from disk, after which you can use it to make predictions on new data.
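A minimal save-and-load round trip with pickle, using a logistic regression trained on the Iris dataset as an example model (the file name `model.pkl` is arbitrary). Two caveats are worth keeping in mind: unpickling a file can execute arbitrary code, so only load files from sources you trust, and scikit-learn models are best loaded with the same library version that saved them.

```python
import pickle

from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000).fit(X, y)

# Save: serialize the fitted model to a byte stream on disk
with open("model.pkl", "wb") as f:
    pickle.dump(model, f)

# Load: only unpickle files you trust, since unpickling can run arbitrary code
with open("model.pkl", "rb") as f:
    loaded_model = pickle.load(f)

print(loaded_model.predict(X[:3]))  # same predictions as the original model
```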

But wait, there’s more! Once you have a saved model, you can deploy it into a production environment. For example, you can host it on a web server and serve predictions through an API. This is a critical step in the development of your machine learning project, as it enables you to scale and monetize it.

In addition to pickle, other libraries are available for saving and loading models. For example, joblib, a third-party library that scikit-learn itself depends on, is a popular alternative to pickle. It is especially efficient for models that contain large NumPy arrays, and it can compress models when saving them to a file, making them easier to store.
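A minimal joblib sketch, saving a compressed random forest and loading it back. The file name and compression level are arbitrary; joblib uses pickle under the hood, so the same rule about only loading trusted files applies.

```python
import joblib
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier

X, y = load_breast_cancer(return_X_y=True)
model = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)

# compress=3 (levels 0-9) trades some save/load speed for a smaller file
joblib.dump(model, "forest.joblib", compress=3)

loaded = joblib.load("forest.joblib")
print((loaded.predict(X) == model.predict(X)).all())  # True
```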

Conclusion

Congratulations! You have taken an essential step towards understanding machine learning with Python. With the tips in this blog post, you are equipped to build a reliable machine learning workflow that can process data, build models, and generate predictions. Mastering machine learning is an ongoing journey, though. There’s always more to learn, and new tools and approaches emerge regularly. That is why it can be worthwhile to hire AI developers in India who specialize in machine learning. These developers can help you stay up to date with the latest developments and apply them to your company’s specific needs. So, if you are ready to take your machine learning projects to the next level, now is the time to start looking for the best Python developers out there. With their support, you can continue honing your machine learning skills and reach new heights in data science.